Feature toggles enable developers to activate or deactivate specific functionalities within an application without deploying new code, facilitating continuous delivery and reducing risk. A/B testing tools allow marketers and product teams to compare multiple versions of a feature or user interface by exposing different user segments to each variant and analyzing performance metrics. Combining feature toggles with A/B testing provides precise control over feature rollout while gathering data-driven insights to optimize user experience and business outcomes.

Comparison Table

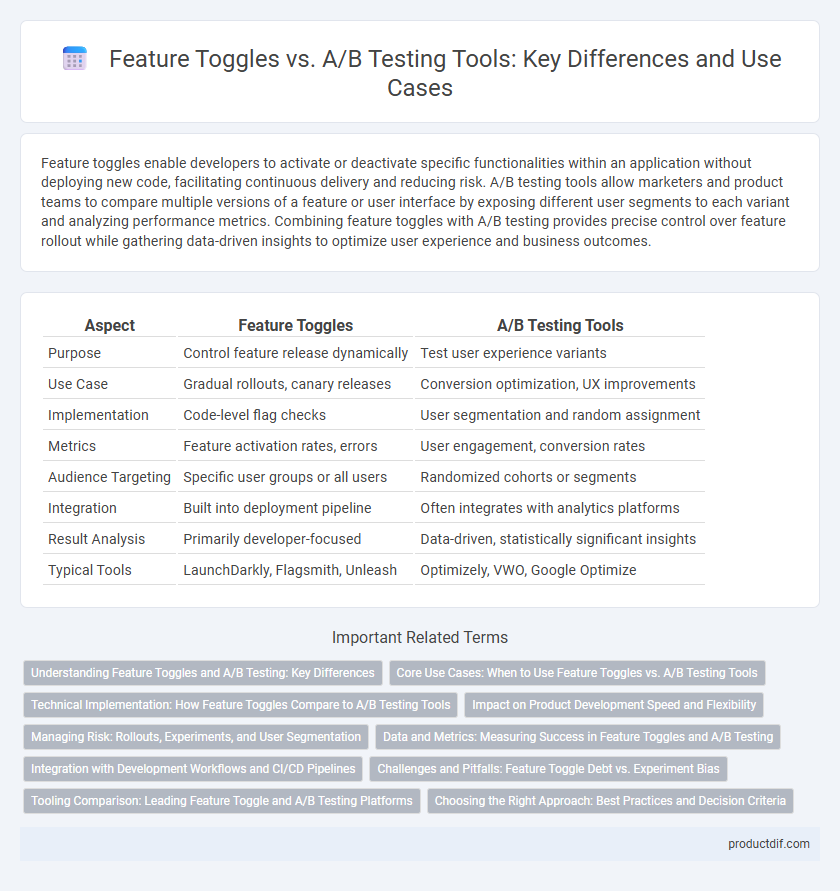

| Aspect | Feature Toggles | A/B Testing Tools |
|---|---|---|
| Purpose | Control feature release dynamically | Test user experience variants |
| Use Case | Gradual rollouts, canary releases | Conversion optimization, UX improvements |
| Implementation | Code-level flag checks | User segmentation and random assignment |
| Metrics | Feature activation rates, errors | User engagement, conversion rates |
| Audience Targeting | Specific user groups or all users | Randomized cohorts or segments |
| Integration | Built into deployment pipeline | Often integrates with analytics platforms |
| Result Analysis | Primarily developer-focused | Data-driven, statistically significant insights |
| Typical Tools | LaunchDarkly, Flagsmith, Unleash | Optimizely, VWO, Google Optimize |

Understanding Feature Toggles and A/B Testing: Key Differences

Feature toggles allow developers to enable or disable specific features in production without deploying new code, facilitating gradual rollouts and quick rollbacks. A/B testing tools compare user experiences by randomly serving different feature variations to measure impact on key metrics like conversion rates and user engagement. While feature toggles focus on operational control and release management, A/B testing emphasizes data-driven decision-making through statistical analysis of user behavior.

Core Use Cases: When to Use Feature Toggles vs. A/B Testing Tools

Feature toggles are ideal for enabling or disabling functionality in production without deploying new code, supporting continuous integration and rapid iteration in development workflows. A/B testing tools excel in evaluating user behavior by comparing variations to optimize UI/UX, conversion rates, or feature adoption. Choose feature toggles for deployment flexibility and testing tools for data-driven decision-making on user experience enhancements.

Technical Implementation: How Feature Toggles Compare to A/B Testing Tools

Feature toggles are implemented as conditional checks embedded in the source code: the application evaluates a flag at runtime and branches accordingly, which gives granular control over functionality without redeploying. A/B testing tools, in contrast, rely on user segmentation and traffic allocation to assign users to variants, and they often integrate with analytics platforms for performance measurement. Variations and traffic splits are typically managed through a configuration dashboard, although the application or page usually still needs an SDK call or script snippet so the tool can assign users and track behavior across cohorts.
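The contrast between a flag check and a variant assignment can be sketched in a few lines of Python. This is a minimal illustration, not the API of any tool named in this article: the `FLAGS` dictionary, the flag name `new_checkout`, the experiment name `checkout_button_copy`, and all function names are hypothetical. Hashing the user ID is one common way to keep assignment stable across sessions.

```python
import hashlib

# Hypothetical in-memory flag store; a real system would load this from a
# flag service or a configuration file.
FLAGS = {"new_checkout": True}


def is_enabled(flag_name: str) -> bool:
    """Feature toggle: a plain conditional lookup that defaults to off."""
    return FLAGS.get(flag_name, False)


def assign_variant(experiment: str, user_id: str,
                   variants=("control", "treatment")) -> str:
    """A/B assignment: hash the user into a stable, roughly uniform bucket."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    return variants[int(digest, 16) % len(variants)]


def checkout_flow(user_id: str) -> str:
    """Combine both: the toggle gates the feature, the experiment picks a variant."""
    if not is_enabled("new_checkout"):
        return "legacy"
    return assign_variant("checkout_button_copy", user_id)
```

Because the bucket depends only on the experiment name and the user ID, the same user sees the same variant on every request, and including the experiment name in the hash keeps assignments in different experiments from lining up.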

Impact on Product Development Speed and Flexibility

Feature toggles speed up product development by letting teams merge and ship code behind a flag, then turn the functionality on or off without another deployment. A/B testing tools provide precise insight into user behavior through controlled experiments, but an experiment must run long enough to collect an adequate sample, and the results then need analysis, so decisions arrive more slowly. Combining feature toggles with A/B testing gives teams both release agility and informed decision-making.
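The test-duration cost can be estimated before an experiment starts. The sketch below uses the standard normal-approximation formula for comparing two proportions; the function names, the default significance level of 0.05, and the default power of 0.8 are illustrative assumptions, and a dedicated statistics package may apply additional corrections.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, mde_abs: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate users needed per variant to detect an absolute lift of
    mde_abs over a baseline conversion rate (two-sided test)."""
    p1 = baseline
    p2 = baseline + mde_abs
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / mde_abs ** 2)


def estimated_days(n_per_variant: int, daily_users: int, variants: int = 2) -> int:
    """Days needed if every eligible daily user enters the experiment."""
    return math.ceil(n_per_variant * variants / daily_users)
```

For example, `sample_size_per_variant(0.10, 0.02)` asks how many users each variant needs to detect a lift from 10% to 12% conversion. Halving the detectable lift roughly quadruples the required sample, which is why small effects make experiments slow.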

Managing Risk: Rollouts, Experiments, and User Segmentation

Feature toggles enable controlled rollouts by selectively activating features for specific user segments, minimizing risk through gradual exposure and quick rollback capabilities. A/B testing tools facilitate experiments by dividing users into variants for performance comparison, optimizing user experience based on data-driven insights. Integrating robust user segmentation ensures precise targeting, enhancing risk management during feature deployment and experimentation phases.
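A gradual rollout with segment targeting and a quick rollback path might look like the following sketch. The `RolloutRule` structure, its field names, and the segment labels are hypothetical and do not mirror any particular vendor's data model.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class RolloutRule:
    enabled: bool = True                                # kill switch for instant rollback
    allowed_segments: set = field(default_factory=set)  # segments that always get the feature
    percentage: int = 0                                 # share (0-100) of all other users


def bucket_for(flag: str, user_id: str) -> int:
    """Map a user to a stable bucket in the range 0-99 for this flag."""
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def is_enabled_for(flag: str, rule: RolloutRule, user_id: str, segment: str) -> bool:
    if not rule.enabled:
        return False                  # rollback: off for everyone
    if segment in rule.allowed_segments:
        return True                   # targeted segment, e.g. internal testers
    return bucket_for(flag, user_id) < rule.percentage
```

Since each user's bucket is fixed, raising `percentage` from 10 to 50 only adds users; nobody who already had the feature loses it mid-rollout. Setting `enabled` to `False` turns the feature off for all users without a deployment.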

Data and Metrics: Measuring Success in Feature Toggles and A/B Testing

Feature toggles are typically monitored through operational metrics, such as activation rates and error rates, for the user segments where a feature is live. A/B testing tools compare variant performance on user behavior, conversion rates, and retention, using statistical tests to judge whether the observed differences are significant. Combining feature toggles with A/B testing allows teams to gather granular insights on feature impact, optimize user experience, and make data-informed product decisions.
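As an illustration of what "statistically significant" means for conversion rates, the sketch below runs a pooled two-proportion z-test using only the Python standard library. The function name and argument names are assumptions for this example; commercial A/B testing tools may use different methods, such as sequential or Bayesian analysis.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rate
    between variant B and variant A, using a pooled standard error."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in outcomes; the test is undefined")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value
```

A call such as `two_proportion_z_test(100, 1000, 150, 1000)` compares 10% against 15% conversion on 1,000 users each. A small p-value suggests the difference is unlikely to be due to chance alone, provided the sample size was fixed in advance and users were assigned randomly.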

Integration with Development Workflows and CI/CD Pipelines

Feature toggles fit naturally into development workflows and CI/CD pipelines: code can be merged and deployed incrementally with the feature switched off, then activated at runtime without redeploying the application. A/B testing tools, while valuable for experimentation, often require additional setup and a data analysis stage, so they integrate less directly with CI/CD pipelines than feature toggle systems. Used this way, feature toggles accelerate continuous delivery and improve developer productivity by bringing controlled rollouts into automated build and deployment processes.

Challenges and Pitfalls: Feature Toggle Debt vs. Experiment Bias

Feature toggle debt accumulates when toggles are not removed promptly after a rollout completes: stale conditionals and dead code paths increase complexity and hamper maintainability. Experiment bias in A/B testing arises from improper randomization, insufficient sample sizes, or inconsistent user segmentation, which skew the data and lead to inaccurate conclusions. Both problems affect product reliability and user experience, so teams need disciplined toggle cleanup and sound statistical methodology to get valid results.
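Both pitfalls can be checked for automatically. The sketch below shows one possible audit for stale toggles and a sample ratio mismatch (SRM) check, which flags broken randomization when the observed traffic split departs from the intended one. The function names and the 90-day age limit are assumptions for illustration, and the SRM check covers only the two-variant case, where the chi-square statistic has one degree of freedom.

```python
import math
from datetime import date, timedelta


def stale_toggles(created_on: dict, today: date, max_age_days: int = 90) -> list:
    """Return the names of toggles created more than max_age_days ago."""
    cutoff = today - timedelta(days=max_age_days)
    return sorted(name for name, created in created_on.items() if created < cutoff)


def srm_p_value(count_a: int, count_b: int, expected_ratio_a: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value for a two-variant traffic split."""
    total = count_a + count_b
    expected_a = total * expected_ratio_a
    expected_b = total - expected_a
    chi2 = ((count_a - expected_a) ** 2 / expected_a
            + (count_b - expected_b) ** 2 / expected_b)
    # With one degree of freedom, the upper-tail probability is erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))
```

A very small SRM p-value (teams often use a strict threshold such as 0.001, though that choice is a convention rather than a rule) suggests the assignment mechanism is biased, and the experiment's results should not be trusted until the cause is found.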

Tooling Comparison: Leading Feature Toggle and A/B Testing Platforms

Leading feature toggle platforms such as LaunchDarkly, Flagsmith, and Unleash provide controlled feature rollout and real-time configuration management, enabling fine-grained deployment strategies. In contrast, A/B testing tools like Optimizely and VWO specialize in experimental design, traffic segmentation, and statistical analysis to drive data-informed user experience optimizations. Google Optimize, long a popular option in this category, was sunset by Google in 2023. Tooling choices depend on factors like development workflow integration, user targeting capabilities, and the desired balance between feature flag complexity and comprehensive testing analytics.

Choosing the Right Approach: Best Practices and Decision Criteria

Feature toggles enable controlled feature rollout by activating or deactivating specific functionality for targeted user segments, which helps manage deployment risk and supports iterative development. A/B testing tools focus on comparing user experiences by delivering different variants and analyzing behavior and conversion metrics, making them ideal for validating hypotheses with statistically significant data. Selecting the right approach depends on project goals, such as rapid feature iteration with toggles versus data-driven decision-making with A/B testing, balanced by criteria like development complexity, user impact, and measurement precision.

Feature toggles vs A/B testing tools Infographic

productdif.com
