Beta testing gathers real users' feedback on a near-final software version to identify bugs and usability issues before the official release. A/B testing compares two versions of a specific feature or interface by directing different user groups to each variant, measuring performance metrics to determine which option yields better results. Both testing methods enhance software quality but serve distinct purposes: beta testing ensures overall stability, while A/B testing optimizes user experience and functionality.

Table of Comparison

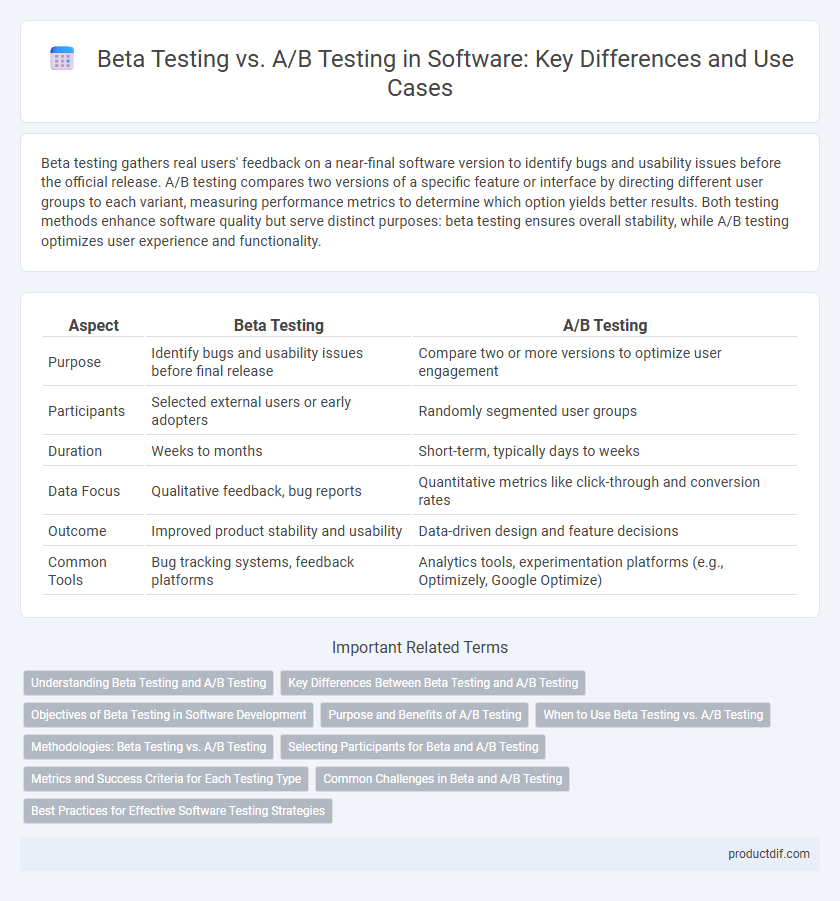

| Aspect | Beta Testing | A/B Testing |
|---|---|---|
| Purpose | Identify bugs and usability issues before final release | Compare two or more versions to optimize user engagement |
| Participants | Selected external users or early adopters | Randomly segmented user groups |
| Duration | Weeks to months | Short-term, typically days to weeks |
| Data Focus | Qualitative feedback, bug reports | Quantitative metrics like click-through and conversion rates |
| Outcome | Improved product stability and usability | Data-driven design and feature decisions |
| Common Tools | Bug tracking systems, feedback platforms | Analytics tools, experimentation platforms (e.g., Optimizely; formerly Google Optimize, discontinued in 2023) |

Understanding Beta Testing and A/B Testing

Beta testing involves releasing a software product to a limited group of external users to identify bugs and gather feedback before the official launch. A/B testing compares two versions of a software feature with different user segments to determine which performs better based on specific metrics. Both methods are critical for optimizing software quality and user experience by incorporating real-world data and user interactions.

Key Differences Between Beta Testing and A/B Testing

The two methods differ in scope, in the kind of evidence they produce, and in how users are involved. Beta testing exposes the whole product to a limited audience outside the development team and relies largely on bug reports and qualitative feedback to judge overall quality before the official launch. A/B testing changes one feature or interface element at a time, shows two or more variations to real users, and chooses between them using specific, predefined metrics.

Objectives of Beta Testing in Software Development

Beta testing in software development aims to identify bugs and usability issues by releasing the software to a limited group of end-users before the full launch. This phase collects real-world feedback to ensure the product meets user expectations and performs reliably under varied conditions. The primary objective is to validate functionality, improve user experience, and detect any critical defects that were not found during internal testing.

Purpose and Benefits of A/B Testing

A/B testing is a method used to compare two versions of a software feature or interface to determine which performs better based on user interactions, driving data-driven decision making. It enables precise measurement of user preferences by analyzing key performance indicators like click-through rates, conversion rates, and user engagement. The benefits of A/B testing include optimizing user experience, increasing conversion rates, and reducing risks by validating changes before full implementation.

When to Use Beta Testing vs. A/B Testing

Beta testing is ideal for evaluating a nearly complete software product with real users to identify bugs and usability issues before full release. A/B testing suits scenarios where specific features, designs, or content variations need data-driven comparisons to optimize user engagement or conversion rates. Choose beta testing for comprehensive feedback on overall functionality and A/B testing for targeted, measurable improvements in user experience.

Methodologies: Beta Testing vs. A/B Testing

Beta testing involves releasing a pre-release version of software to a select group of users to gather real-world feedback and identify bugs before the official launch. A/B testing randomly splits users into two or more groups, serves each group a different version of a feature or interface, and measures performance through predefined metrics such as conversion rates or user engagement. While beta testing prioritizes comprehensive validation of the user experience, A/B testing isolates a single variable so that any difference in the metric can be attributed to the change being tested.
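
The group split described above is often implemented by hashing a user identifier, so that the same user always sees the same variant. The following is a minimal sketch of that idea, not the method of any particular experimentation platform; the function name `assign_variant`, the experiment name, and the user ID format are all hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a user to one variant of an experiment.

    Hashing the experiment name together with the user ID keeps the
    assignment stable across sessions and independent across experiments.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)  # index of the chosen variant
    return variants[bucket]


if __name__ == "__main__":
    # Hypothetical user IDs, used only to show that the split is roughly even.
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"user-{i}", "checkout-button")] += 1
    print(counts)
```

Because the assignment depends only on the inputs, no lookup table is needed to remember which user saw which version.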

Selecting Participants for Beta and A/B Testing

Selecting participants for beta testing involves recruiting a diverse group of end-users who represent real-world usage scenarios to identify bugs and usability issues before the official software release. In contrast, A/B testing draws a random sample from the target user base that is large enough to detect a meaningful difference between variations of a feature or design, so that the experience can be optimized based on measured performance metrics. Effective participant selection in both testing methods ensures reliable feedback and data-driven decisions for software improvements.
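
How many users an A/B test needs can be estimated before it starts. The sketch below uses the standard normal-approximation formula for comparing two proportions; the function name `sample_size_per_variant` and the example rates (a 10% baseline and a hoped-for 12%) are assumptions made for illustration, not figures from this article.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, expected: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate users needed per variant to detect baseline -> expected.

    Uses the two-sided normal approximation for two independent proportions.
    """
    if baseline == expected:
        raise ValueError("baseline and expected rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = baseline * (1 - baseline) + expected * (1 - expected)
    effect = expected - baseline
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


if __name__ == "__main__":
    # Hypothetical rates: 10% baseline conversion, 12% hoped-for conversion.
    print(sample_size_per_variant(0.10, 0.12))
```

Smaller expected differences require far larger samples, which is one reason A/B tests on low-traffic features can take weeks.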

Metrics and Success Criteria for Each Testing Type

Beta testing success metrics primarily include user engagement rates, defect detection counts, and qualitative feedback on usability, reflecting real-world application and user acceptance. A/B testing focuses on quantitative metrics such as conversion rates, click-through rates, and time-on-page, aiming to statistically validate the performance of variations against predefined business goals. Defining clear success criteria (error reduction for beta testing, optimization of key performance indicators for A/B testing) ensures targeted improvements and reliable product iterations.
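
Statistical validation of an A/B result usually means checking whether the observed difference in a rate could plausibly be chance. One common choice, shown here as a sketch rather than as the only valid method, is a two-proportion z-test. The function name and the conversion counts in the example are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # rate under the null hypothesis
    if pooled in (0.0, 1.0):
        raise ValueError("test is undefined when no one or everyone converts")
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: 200 of 2000 users convert on A, 250 of 2000 on B.
    z, p = two_proportion_z_test(200, 2000, 250, 2000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

The significance threshold (for example, p below 0.05) should be fixed as part of the success criteria before the test runs, not chosen after looking at the data.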

Common Challenges in Beta and A/B Testing

Beta testing and A/B testing frequently encounter challenges related to participant selection, where beta testers may not represent the target user base while A/B test groups require strict randomization for valid results. Data interpretation issues arise from incomplete feedback in beta testing and statistical significance requirements in A/B testing, complicating decision-making. Both methods face difficulties in managing external variables that can skew performance metrics, reducing the reliability of feature evaluation.
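
One practical way to catch broken randomization is a sample ratio mismatch check: compare the observed group sizes with the intended split and flag the experiment if they diverge more than chance would explain. The sketch below assumes a two-group test and uses a chi-square goodness-of-fit test with one degree of freedom; the function name and the user counts are hypothetical.

```python
import math


def srm_p_value(n_a: int, n_b: int, expected_share_a: float = 0.5) -> float:
    """P-value that the observed group sizes match the intended split.

    For one degree of freedom, the chi-square tail probability equals
    erfc(sqrt(chi2 / 2)), so no external statistics library is needed.
    """
    total = n_a + n_b
    exp_a = total * expected_share_a
    exp_b = total - exp_a
    chi2 = (n_a - exp_a) ** 2 / exp_a + (n_b - exp_b) ** 2 / exp_b
    return math.erfc(math.sqrt(chi2 / 2))


if __name__ == "__main__":
    # Hypothetical counts for a test that was meant to split traffic 50/50.
    p = srm_p_value(5000, 5300)
    print(f"p = {p:.4f}")
    if p < 0.01:
        print("Possible sample ratio mismatch: check the assignment logic.")
```

A very small p-value suggests that something other than random assignment is shaping the groups, in which case the metric comparison itself should not be trusted.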

Best Practices for Effective Software Testing Strategies

An effective strategy uses both methods: beta testing to collect real-world feedback on a nearly complete version from a select group of users, and A/B testing to compare versions on measured performance. Defining clear objectives, segmenting target audiences, and collecting both quantitative and qualitative data yields more accurate insights in either phase. Iterating on the analysis and making timely adjustments improves software quality, user experience, and the efficiency of deployment decisions.

Beta Testing vs A/B Testing Infographic

productdif.com
