Edge AI processing enhances electronic pet devices by enabling real-time data analysis directly on the device, reducing latency and improving responsiveness for interactive features. Cloud AI processing offers extensive computational power and storage, supporting complex algorithms and continuous model updates but relies on stable internet connectivity. Choosing between edge and cloud AI impacts performance, privacy, and user experience in electronic pets.

Table of Comparison

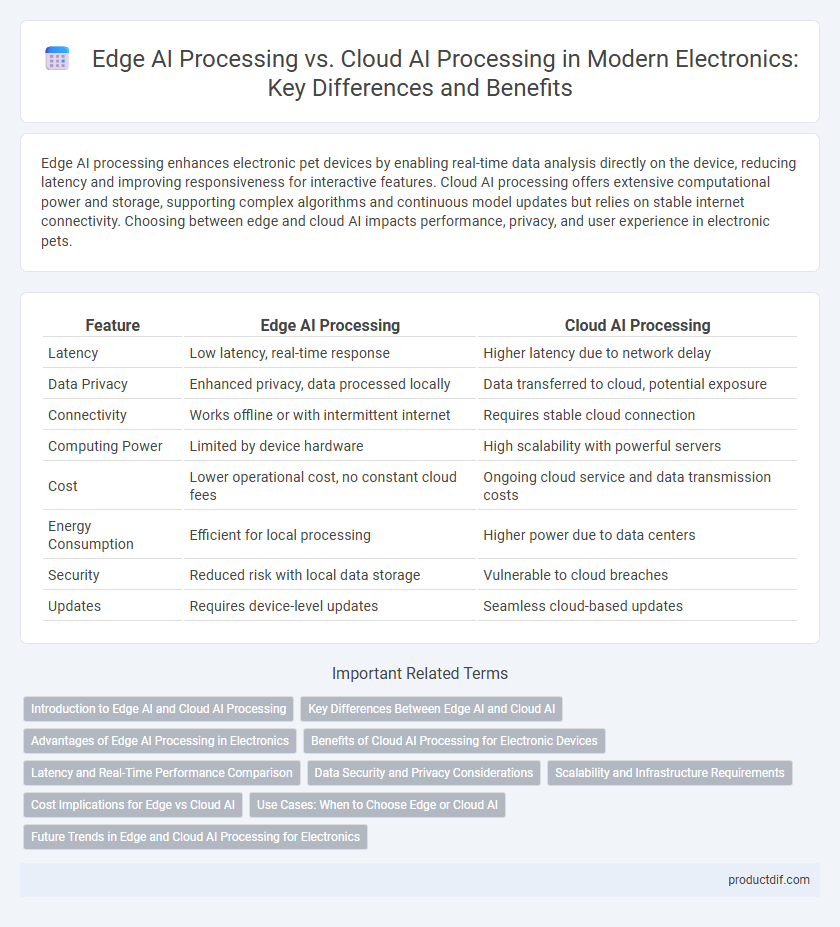

| Feature | Edge AI Processing | Cloud AI Processing |
|---|---|---|
| Latency | Low latency, real-time response | Higher latency due to network delay |
| Data Privacy | Enhanced privacy, data processed locally | Data transferred to cloud, potential exposure |
| Connectivity | Works offline or with intermittent internet | Requires stable cloud connection |
| Computing Power | Limited by device hardware | High scalability with powerful servers |
| Cost | Lower operational cost, no constant cloud fees | Ongoing cloud service and data transmission costs |
| Energy Consumption | Efficient for local processing | Higher power due to data centers |
| Security | Reduced risk with local data storage | Vulnerable to cloud breaches |
| Updates | Requires device-level updates | Seamless cloud-based updates |

Introduction to Edge AI and Cloud AI Processing

Edge AI processing involves performing data analysis and machine learning computations directly on local devices such as sensors, smartphones, or embedded systems, significantly reducing latency and enhancing real-time decision-making. Cloud AI processing relies on centralized data centers with vast computational resources to handle large-scale data processing, model training, and complex analytics, enabling more powerful AI capabilities but with increased data transmission times. Both methods play crucial roles in electronics, where edge AI supports immediate, low-bandwidth applications and cloud AI facilitates intensive, large-volume computing tasks.

Key Differences Between Edge AI and Cloud AI

Edge AI processing occurs locally on devices such as sensors, smartphones, or embedded systems, offering low latency, enhanced data privacy, and reduced bandwidth usage by processing data on-site. Cloud AI processing relies on centralized data centers with significant computational power, facilitating large-scale data analysis and model training but often incurring higher latency and dependency on stable internet connections. Key differences include data processing location, response time, privacy, and infrastructure requirements, with Edge AI ideal for real-time applications and Cloud AI suited for complex, resource-intensive tasks.

Advantages of Edge AI Processing in Electronics

Edge AI processing in electronics enables real-time data analysis with minimal latency by performing computations locally on devices like sensors and smartphones. This local processing enhances security and privacy by reducing data transmission to external servers, minimizing vulnerability to breaches. Additionally, Edge AI lowers bandwidth usage and operational costs, making it ideal for applications in autonomous vehicles, industrial automation, and smart home devices.

Benefits of Cloud AI Processing for Electronic Devices

Cloud AI processing gives electronic devices access to scalable computational power, enabling complex data analysis without local hardware constraints. Offloading intensive AI tasks to external servers can reduce device cost and on-device energy consumption, although the device still spends power on network transmission. Because the models run on the server, they can be updated and retrained centrally without touching each device, improving performance and adaptability over time.

Latency and Real-Time Performance Comparison

Edge AI processing significantly reduces latency by performing data analysis directly on local devices, enabling real-time decision-making crucial for applications such as autonomous vehicles and industrial automation. Cloud AI processing, while offering extensive computational power and scalability, experiences higher latency due to data transmission over networks, which can hinder instantaneous response times. Real-time performance is thus superior in Edge AI, providing faster inference and lower dependence on internet connectivity compared to cloud-based AI solutions.
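The latency trade-off described above can be sketched as a simple budget: an edge request pays only for local inference, while a cloud request also pays for the network round trip and payload transfer. This is a minimal illustration, not a measurement. The class name `LatencyBudget`, the helper `meets_deadline`, and every number are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class LatencyBudget:
    """Latency components in milliseconds (all values here are hypothetical)."""
    inference_ms: float          # time to run the model once
    network_rtt_ms: float = 0    # round trip to a remote server (0 on-device)
    payload_ms: float = 0        # time to upload the input and download the result

    def total_ms(self) -> float:
        # End-to-end latency is the sum of all components.
        return self.inference_ms + self.network_rtt_ms + self.payload_ms


def meets_deadline(budget: LatencyBudget, deadline_ms: float) -> bool:
    """Return True when the end-to-end latency fits within the deadline."""
    return budget.total_ms() <= deadline_ms


# Assumed figures: a slower on-device chip versus a fast server behind a network.
edge = LatencyBudget(inference_ms=40)
cloud = LatencyBudget(inference_ms=5, network_rtt_ms=80, payload_ms=30)

for name, budget in (("edge", edge), ("cloud", cloud)):
    print(name, budget.total_ms(), meets_deadline(budget, deadline_ms=100))
```

With these assumed figures the server runs the model faster, yet the network terms push the cloud path past a 100 ms deadline. Different hardware or network conditions could reverse the result.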

Data Security and Privacy Considerations

Edge AI processing enhances data security and privacy by performing computations locally on devices, minimizing the transmission of sensitive information over networks. Cloud AI processing relies on centralized servers, which can introduce vulnerabilities because data is stored and processed off-site, increasing exposure to potential breaches. Keeping personal and confidential data within the device environment limits the opportunities for unauthorized access, both in transit and on remote servers.
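One way to picture "minimizing the transmission of sensitive information" is to reduce raw sensor readings to a small summary on the device and upload only that summary. The sketch below is illustrative only. The function `summarize_on_device`, the field names, and the sample readings are assumptions, and aggregation alone is not a complete privacy measure.

```python
import json
from statistics import fmean
from typing import Dict, Sequence


def summarize_on_device(readings: Sequence[float]) -> Dict[str, float]:
    """Reduce raw readings to aggregates; the raw values never leave the device."""
    if not readings:
        return {"count": 0}
    return {
        "count": len(readings),
        "mean": round(fmean(readings), 2),
        "peak": max(readings),
    }


# Hypothetical microphone levels captured locally.
raw_readings = [0.12, 0.15, 0.91, 0.14, 0.13]

summary = summarize_on_device(raw_readings)
payload = json.dumps(summary)  # this string is all that would be uploaded
print(payload)
```

The upload carries three aggregate numbers rather than the full series, so less detail is exposed if the transmission or the server is compromised.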

Scalability and Infrastructure Requirements

Edge AI processing enhances scalability by enabling local data computation, reducing latency, and minimizing bandwidth usage, which decreases reliance on expansive cloud infrastructure. Cloud AI processing supports high scalability through elastic resource allocation but demands robust, high-capacity data centers and continuous network connectivity. Edge AI reduces infrastructure costs by distributing computing power across devices, whereas cloud AI centralizes workloads, requiring costly, scalable server farms and extensive network infrastructure.

Cost Implications for Edge vs Cloud AI

Edge AI processing reduces data transmission costs by analyzing information locally, minimizing reliance on paid cloud bandwidth. Cloud AI processing incurs higher operational costs due to continuous data uploads, storage fees, and charges for computational resources on cloud platforms. Deploying Edge AI can lead to cost savings in real-time applications by decreasing cloud service dependency and optimizing energy consumption within electronic devices.
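The cost argument can be made concrete with a rough monthly estimate. The sketch below assumes a cloud bill with only two parts, data transfer and per-request inference, and models an edge-first deployment as one that offloads only a fraction of its traffic. The function `monthly_cloud_cost`, its parameters, and all prices are hypothetical and do not reflect any real provider's rates.

```python
def monthly_cloud_cost(
    devices: int,
    mb_per_device_per_day: float,
    requests_per_device_per_day: int,
    price_per_gb: float,
    price_per_1k_requests: float,
    offload_fraction: float = 1.0,
    days: int = 30,
) -> float:
    """Estimate recurring cloud fees for a fleet of devices.

    offload_fraction is the share of traffic still sent to the cloud:
    1.0 models a cloud-only design, a small value models edge-first.
    """
    gb_total = devices * mb_per_device_per_day * days * offload_fraction / 1000
    requests_total = devices * requests_per_device_per_day * days * offload_fraction
    return gb_total * price_per_gb + requests_total / 1000 * price_per_1k_requests


# Assumed fleet and prices, for illustration only.
fleet = dict(
    devices=1000,
    mb_per_device_per_day=50,
    requests_per_device_per_day=200,
    price_per_gb=0.10,
    price_per_1k_requests=0.50,
)

cloud_only = monthly_cloud_cost(**fleet)
edge_first = monthly_cloud_cost(**fleet, offload_fraction=0.05)
print(f"cloud-only: {cloud_only:.2f}  edge-first: {edge_first:.2f}")
```

The estimate leaves out the one-off cost of more capable device hardware, which a full comparison would need to include.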

Use Cases: When to Choose Edge or Cloud AI

Edge AI processing excels in scenarios requiring real-time data analysis and low latency, such as autonomous vehicles, industrial automation, and healthcare monitoring devices. Cloud AI processing is preferable for applications demanding vast computational resources and large-scale data aggregation, including predictive analytics, natural language processing, and large-scale recommendation systems. Choosing between Edge and Cloud AI depends on factors like latency sensitivity, bandwidth availability, data privacy requirements, and computational power needs.
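The factors listed above can be turned into a small rule-of-thumb helper. This is a simplified heuristic, not an established decision procedure. The `Workload` fields, the 150 ms default for expected cloud latency, and the "hybrid" outcome for workloads that need local handling but do not fit on the device are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    max_latency_ms: float    # how quickly a response is needed
    reliable_network: bool   # is stable connectivity available?
    sensitive_data: bool     # must raw data stay on the device?
    fits_on_device: bool     # can the model run within device limits?


def choose_processing(w: Workload, expected_cloud_latency_ms: float = 150.0) -> str:
    """Return 'edge', 'cloud', or 'hybrid' using a simple heuristic."""
    needs_local = (
        not w.reliable_network
        or w.sensitive_data
        or w.max_latency_ms < expected_cloud_latency_ms
    )
    if not needs_local:
        return "cloud"
    # Local handling is needed; fall back to a split design if the model is too big.
    return "edge" if w.fits_on_device else "hybrid"


print(choose_processing(Workload(50, True, False, True)))     # tight deadline
print(choose_processing(Workload(2000, True, False, False)))  # heavy batch job
print(choose_processing(Workload(50, True, True, False)))     # private but too large
```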

Future Trends in Edge and Cloud AI Processing for Electronics

Edge AI processing in electronics will increasingly leverage advanced neural network accelerators and low-power AI chips to enable real-time data analysis and enhanced privacy at the device level. Cloud AI processing will continue expanding through scalable infrastructure, vast datasets, and high-performance computing, delivering complex model training and large-scale analytics. Future trends emphasize a hybrid approach that combines edge responsiveness with cloud computational power to optimize efficiency, reduce latency, and enhance security in electronic devices.
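A hybrid approach is often structured as "answer locally when confident, otherwise ask the cloud". The sketch below shows one such pattern under stated assumptions. The models are stand-in functions rather than real inference calls, and the names `hybrid_infer`, `tiny_edge_model`, `big_cloud_model`, and `offline_cloud_model`, along with the 0.8 confidence threshold, are hypothetical.

```python
from typing import Callable, Optional, Tuple

Prediction = Tuple[str, float]  # (label, confidence between 0 and 1)
Model = Callable[[str], Prediction]


def hybrid_infer(
    sample: str,
    edge_model: Model,
    cloud_model: Optional[Model],
    min_confidence: float = 0.8,
) -> Tuple[str, str]:
    """Return (label, source), where source is 'edge' or 'cloud'."""
    label, confidence = edge_model(sample)
    if confidence >= min_confidence or cloud_model is None:
        return label, "edge"
    try:
        cloud_label, _ = cloud_model(sample)
        return cloud_label, "cloud"
    except ConnectionError:
        # Network unavailable: keep the local answer rather than fail.
        return label, "edge"


# Stand-in models for demonstration only.
def tiny_edge_model(sample: str) -> Prediction:
    return ("bark", 0.95) if sample == "loud" else ("unknown", 0.40)


def big_cloud_model(sample: str) -> Prediction:
    return ("purr", 0.99)


def offline_cloud_model(sample: str) -> Prediction:
    raise ConnectionError("no network")


print(hybrid_infer("loud", tiny_edge_model, big_cloud_model))
print(hybrid_infer("soft", tiny_edge_model, big_cloud_model))
print(hybrid_infer("soft", tiny_edge_model, offline_cloud_model))
```

Confident predictions never leave the device, which keeps the edge benefits for the common case. Only uncertain samples pay the network cost, and a lost connection degrades to the local answer.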

Edge AI Processing vs Cloud AI Processing Infographic

productdif.com
